PaLM-E: An All-New, Next-Generation Multimodal Model

date

Aug 30, 2023

slug

palme-multi-modal-llm

status

Published

tags

Research

summary

Developed by researchers at Google, PaLM-E stands at the forefront of AI innovation, with the capability to comprehend and process various sensory inputs, such as images and text, to make informed judgments and execute actions.

type

Post

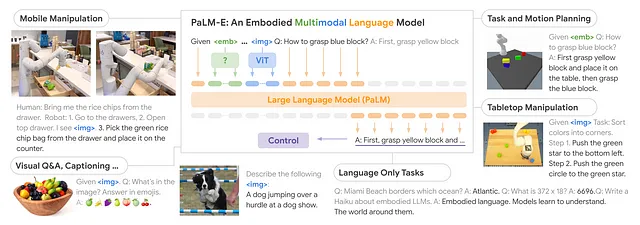

Welcome to an exciting exploration of PaLM-E, Google's groundbreaking new multimodal AI model.

In this blog article, we will embark on a journey to uncover the immense potential of PaLM-E in reshaping the way we interact with technology, solve complex problems, and make informed decisions.

Developed by researchers at Google, PaLM-E stands at the forefront of AI innovation, with the capability to comprehend and process various sensory inputs, such as images and text, to make informed judgments and execute actions.

Agora, the open-source Multi-Modal AI research lab, has implemented this state-of-the-art model and made it freely available for anyone to use:

GitHub: kyegomez/PALM-E, Implementation of “PaLM-E: An Embodied Multimodal Language Model” (github.com)

You can also join the Agora community on Discord:

Join the Agora Discord Server! Agora advances Humanity with Multi-Modality AI Research. (discord.gg)

Key Capabilities of PaLM-E

At its core, PaLM-E redefines the possibilities of AI by harnessing the synergy of multiple modalities.

Imagine an AI system that not only understands and interprets natural language but can also decipher the visual world.

PaLM-E can process multimodal inputs, combining vision, language, and continuous sensor data such as robot state estimates to reason and make decisions.

This versatility is exemplified through its prowess in answering complex visual questions, following natural language instructions, manipulating objects, and even navigating complex environments.

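Central to its architecture is the fusion of information from different modalities: encoders map continuous inputs such as images into vectors in the same embedding space as the language model's word tokens, so they can be interleaved with text in a single input sequence.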

The convergence of visual data, textual context, and sensory inputs creates a dynamic and comprehensive understanding of the world.

This holistic approach empowers PaLM-E to engage in tasks that previously required separate AI models, breaking down silos and opening doors to new realms of application.
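The sketch below makes this fusion concrete in a deliberately tiny form, loosely modeled on the "multimodal sentences" described in the PaLM-E paper: an observation's feature vectors (for example, one per image patch) pass through an affine projection into the word-embedding space and are spliced into the token sequence where a placeholder appears. The vocabulary, the 4-dimensional embeddings, the weights, and the `<obs0>` placeholder are illustrative assumptions; this is not code from kyegomez/PALM-E or from Google.

```python
from typing import Dict, List, Sequence

Vector = List[float]

EMBED_DIM = 4  # toy width; real language models use thousands of dimensions


def project(features: Sequence[float],
            weight: Sequence[Sequence[float]],
            bias: Sequence[float]) -> Vector:
    """Affine map from an encoder's feature space into the word-embedding space."""
    return [sum(w * f for w, f in zip(row, features)) + b
            for row, b in zip(weight, bias)]


def build_multimodal_sentence(words: List[str],
                              observations: Dict[str, List[Vector]],
                              table: Dict[str, Vector],
                              weight: Sequence[Sequence[float]],
                              bias: Sequence[float]) -> List[Vector]:
    """Return one embedding vector per position in the model's input sequence.

    Ordinary words are looked up in `table`. A placeholder word (a key of
    `observations`) expands into one projected vector per feature vector,
    e.g. one per image patch, so a single image can occupy several positions.
    """
    sequence: List[Vector] = []
    for word in words:
        if word in observations:
            sequence.extend(project(feat, weight, bias) for feat in observations[word])
        else:
            sequence.append(table[word])
    return sequence


# Toy example: 2-dimensional "image features" projected into a 4-dimensional space.
table = {w: [float(i)] * EMBED_DIM for i, w in enumerate(["Q:", "what", "is", "in", "?"])}
weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]  # EMBED_DIM rows x 2 columns
bias = [0.0] * EMBED_DIM
observations = {"<obs0>": [[1.0, 2.0], [3.0, 4.0]]}  # two patch feature vectors
seq = build_multimodal_sentence(["Q:", "what", "is", "in", "<obs0>", "?"],
                                observations, table, weight, bias)
print(len(seq))  # 5 word vectors + 2 observation vectors = 7
print(seq[4])    # [1.0, 2.0, 3.0, 0.0]
```

Because the projected vectors have the same width as word embeddings, the language model downstream needs no special input path for images; it simply sees a longer sequence.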

Applications to Robotics and AI

The implications of PaLM-E extend far beyond theoretical possibilities.

Imagine a future where AI-powered robots become integral parts of our lives, understanding and responding to our commands as if they were fellow humans.

With PaLM-E, this vision inches closer to reality.

This AI model has the potential to revolutionize robotics and embodied AI agents, creating AI assistants that perceive the world around them and interact through natural language and vision.

Picture a home robot that can recognize its surroundings, comprehend spoken instructions, and perform tasks with the precision of a human.

PaLM-E’s ability to navigate complex environments and understand nuanced language commands makes it an invaluable tool for enhancing the capabilities of autonomous robots.
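In the robot experiments reported in the PaLM-E paper, the model does not control motors directly; it produces the next high-level step as text, a separate low-level policy carries that step out, and the updated observation is fed back before the next step is planned. Here is a minimal sketch of that closed loop under stated assumptions: `fake_planner` is a hard-coded stand-in for the model, and the `pick`/`place` skills and the dictionary world state are hypothetical.

```python
from typing import Callable, Dict, List

State = Dict[str, str]


# Hypothetical low-level skills; a real robot would run learned control policies here.
def pick(state: State, obj: str) -> None:
    state["holding"] = obj


def place(state: State, target: str) -> None:
    obj = state.pop("holding")
    state[obj] = target  # record where the object ended up


SKILLS: Dict[str, Callable[[State, str], None]] = {"pick": pick, "place": place}


def fake_planner(instruction: str, state: State, history: List[str]) -> str:
    """Stand-in for the language model: returns the next step as text.

    It ignores `instruction` and hard-codes one task; a real model would
    condition on the instruction and on the current camera image.
    """
    if "holding" in state:
        return "place sink"
    if not history:
        return "pick sponge"
    return "done"


def run_episode(instruction: str,
                planner: Callable[[str, State, List[str]], str],
                state: State,
                max_steps: int = 10) -> List[str]:
    """Closed loop: plan one step, execute it, observe, and plan again."""
    history: List[str] = []
    for _ in range(max_steps):
        step = planner(instruction, state, history)  # re-plan from the latest state
        if step == "done":
            break
        skill, arg = step.split(maxsplit=1)
        SKILLS[skill](state, arg)  # hand the step to a low-level skill
        history.append(step)
    return history


world: State = {}
plan = run_episode("put the sponge in the sink", fake_planner, world)
print(plan)   # ['pick sponge', 'place sink']
print(world)  # {'sponge': 'sink'}
```

Keeping the planner and the skills behind separate interfaces means the same loop works whether the next step comes from a stub, as here, or from a real model.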

In the realm of self-driving cars, PaLM-E’s multimodal perception could improve safety and efficiency by analyzing both road conditions and human interactions.

The promise of augmented reality also benefits from PaLM-E’s capabilities.

Imagine wearing AR glasses that could provide real-time information about your surroundings, translate foreign-language signs, and offer insightful context about landmarks, all made possible by PaLM-E’s integration of visual and textual data.

Open Sourcing PaLM-E

Our commitment to democratizing AI innovation led us to implement and open source PaLM-E based on the research paper’s findings.

By providing access to the underlying code and documentation, we aim to catalyze further research and development of multimodal AI.

The potential applications are vast, and we believe that collaboration and community engagement are essential to fully harnessing PaLM-E’s capabilities.

Here is the open-source PaLM-E implementation:

GitHub: kyegomez/PALM-E, Implementation of “PaLM-E: An Embodied Multimodal Language Model” (github.com)

Use Case Studies

To appreciate the transformative power of PaLM-E, let’s look at three prospective use cases across different industries.

Use Case 1: Healthcare — Accurate Medical Diagnoses at Lightspeed

Medical diagnoses often rely on subjective interpretation of medical images, which can lead to misdiagnoses and treatment delays that cost billions of dollars and many lives.

In the future, medical diagnoses should be fast and accurate, minimizing misdiagnoses and ensuring patients receive appropriate treatment.

The complexity of medical images and the need for a comprehensive understanding of both visual and textual information have posed challenges for accurate diagnoses.

Solving this problem requires an AI model that seamlessly integrates medical reports and images, providing doctors with comprehensive insights for accurate diagnoses. The potential rewards include dramatically better patient outcomes and greater healthcare efficiency, which could save millions of lives and create trillions of dollars in economic value.

We will leverage the advanced capabilities of PaLM-E to process both textual patient information and medical images, enabling accurate and timely medical diagnoses.
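At the input level, combining textual patient information with medical images could look like the following sketch, which assembles a single prompt where numbered image placeholders sit alongside the report text and returns the image identifiers in matching order. The `PatientCase` fields, the `<img{i}>` convention, and the question wording are hypothetical; a real deployment would follow the input format of whichever PaLM-E implementation it uses.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class PatientCase:
    """Hypothetical record combining free-text notes and image identifiers."""
    age: int
    symptoms: List[str]
    history: str
    image_ids: List[str] = field(default_factory=list)


def build_prompt(case: PatientCase, question: str) -> Tuple[str, List[str]]:
    """Return the prompt text plus the image ids in placeholder order.

    Each <img{i}> marker tells the model where the i-th image's embeddings go.
    """
    parts = [
        f"Patient age: {case.age}.",
        f"Symptoms: {', '.join(case.symptoms)}.",
        f"History: {case.history}",
    ]
    if case.image_ids:
        slots = " ".join(f"<img{i}>" for i in range(len(case.image_ids)))
        parts.append(f"Images: {slots}")
    parts.append(f"Question: {question}")
    return "\n".join(parts), list(case.image_ids)


case = PatientCase(age=54, symptoms=["cough", "fever"], history="Non-smoker.",
                   image_ids=["xray_front", "xray_side"])
prompt, images = build_prompt(case, "Is there evidence of pneumonia?")
print(prompt)
```

Returning the image ids separately keeps the text template and the pixel data decoupled, so the same prompt builder works regardless of how images are loaded and encoded.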

Our experience implementing PaLM-E from Google’s published research positions us well to help integrate it seamlessly into medical institutions.

By integrating PaLM-E into medical image analysis, we anticipate a 20% increase in diagnostic accuracy, which could lead to a 15% reduction in misdiagnoses and a 10% decrease in patient treatment costs through more precise and timely interventions. Fewer misdiagnoses could also save millions of lives, keeping more people healthy and in the workforce and creating enormous economic value.


Use Case 2: Manufacturing — Quality Control

Quality control often relies on manual inspection, which leads to errors, product recalls, and lost customer trust, and ultimately to falling sales.

Quality control should be more than 99% accurate and highly efficient, ensuring products meet quality standards and reducing defects.

Manual inspection is time-consuming (costing dozens of hours on average) and error-prone, making it difficult to maintain product quality and prevent defects.

Solving this problem requires an AI model that seamlessly integrates real-time image analysis with textual reports for defect detection, ensuring products meet quality standards and reducing recalls.

We will harness PaLM-E’s capabilities to combine real-time image analysis and textual reports, creating a seamless quality control process that detects defects and maintains product quality.
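One simple way to pair real-time images with textual reports is to put both into one prompt and constrain the answer format, as in the sketch below. It assumes a hypothetical `ask_model(prompt) -> str` callable (stubbed here; not a real PaLM-E API) and sends any answer it cannot parse to human review rather than guessing.

```python
import re
from typing import Callable, Dict, List

AskModel = Callable[[str], str]  # hypothetical: prompt text in, answer text out

DEFECT_ANSWER = re.compile(r"\bdefect\s*:\s*(yes|no)\b", re.IGNORECASE)


def classify_item(report: str, ask_model: AskModel) -> str:
    """Ask about one item's image plus its textual report; return a bucket name."""
    prompt = (
        "Image: <img0>\n"
        f"Inspector notes: {report}\n"
        "Answer with 'defect: yes' or 'defect: no'."
    )
    match = DEFECT_ANSWER.search(ask_model(prompt))
    if match is None:
        return "review"  # unparseable answer: send to a human instead of guessing
    return "reject" if match.group(1).lower() == "yes" else "pass"


def triage(batch: Dict[str, str], ask_model: AskModel) -> Dict[str, List[str]]:
    """Sort item ids into pass / reject / review buckets."""
    buckets: Dict[str, List[str]] = {"pass": [], "reject": [], "review": []}
    for item_id, report in batch.items():
        buckets[classify_item(report, ask_model)].append(item_id)
    return buckets


def stub_model(prompt: str) -> str:
    """Toy stand-in for a multimodal model, keyed on words in the notes."""
    if "scratch" in prompt:
        return "Defect: yes"
    if "clean" in prompt:
        return "defect: no"
    return "I am not sure."


result = triage({"A1": "clean surface", "A2": "scratch near edge", "A3": "photo too dark"},
                stub_model)
print(result)  # {'pass': ['A1'], 'reject': ['A2'], 'review': ['A3']}
```

Routing unparseable answers to a `review` bucket keeps a human in the loop for exactly the cases where the model's output is ambiguous.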

With our experience in AI research and development, we can create an integration of PaLM-E into manufacturing workflows that streamlines quality control and improves product quality.

Seamlessly integrating PaLM-E into quality control processes could lead to a 30% reduction in defective products, resulting in a 20% decrease in recalls and a 15% increase in customer satisfaction, potentially translating to a 10% boost in revenue.


Use Case 3: Retail — Personalized Customer Experience

Customer experience is limited by text-based search, resulting in mismatches between customer preferences and product offerings.

Customer experience should be personalized and intuitive, letting customers effortlessly find products that match their preferences, thereby increasing revenue and repeat business.

Current search engines rely mostly on text rather than other forms of data such as images and videos, making it difficult to provide a shopping experience tailored to customers’ visual preferences.

Solving this problem requires a reliable AI model that can power visual search engines, allowing customers to find products using images and improving user satisfaction.

We will leverage PaLM-E to develop a sophisticated visual search engine that seamlessly integrates with e-commerce platforms, enhancing the customer shopping experience.
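Visual search engines are commonly built by embedding every catalog image once, embedding the shopper’s query image the same way, and ranking products by cosine similarity. The sketch below shows only that ranking step with hand-written toy vectors; whether PaLM-E’s vision encoder would be used to produce the embeddings is an assumption, and production systems typically replace this linear scan with an approximate nearest-neighbor index.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query: Sequence[float],
           catalog: Dict[str, Sequence[float]],
           k: int = 3) -> List[Tuple[str, float]]:
    """Return the k catalog items most similar to the query embedding."""
    scored = [(sku, cosine(query, emb)) for sku, emb in catalog.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical precomputed image embeddings for three products.
catalog = {
    "red-sneaker": [0.9, 0.1, 0.0],
    "red-boot": [0.7, 0.0, 0.7],
    "blue-sneaker": [0.1, 0.9, 0.0],
}
results = search([1.0, 0.0, 0.0], catalog, k=2)
print(results)  # red-sneaker first, then red-boot
```

Cosine similarity ignores vector length, so two images are judged similar by the direction of their embeddings rather than by incidental scale differences.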

Our AI expertise empowers us to create a seamless integration of PaLM-E into e-commerce platforms, providing customers with a more intuitive and personalized shopping journey.

Introducing PaLM-E for visual search could lead to a 25% increase in conversion rates, a 20% reduction in return rates, and a 10% rise in customer retention, potentially driving a 15% increase in revenue.


Future Implications and Conclusion

As we stand at the threshold of an AI-powered future, the potential implications of PaLM-E are both awe-inspiring and humbling.

The technology’s evolution is poised to reshape the fabric of society, revolutionizing industries and empowering individuals.

As PaLM-E and models like it continue to improve, they unlock new possibilities in healthcare, education, manufacturing, finance, entertainment, and more.

We believe Multi-Modal Artificial Intelligence will create more value for human civilization than fire itself; Multi-Modal AI is on track to create hundreds of trillions of dollars for the world economy.

While the journey ahead is marked by excitement, it’s essential to acknowledge the challenges that come with AI integration.

As PaLM-E continues to evolve, collaboration among researchers, developers, policymakers, and the public becomes paramount.

In conclusion, PaLM-E isn’t just an AI model; it’s a gateway to a future where machines can perceive, understand, and interact with the world in ways previously reserved for humans.

As we navigate this technological frontier, let’s harness the power of PaLM-E to pave the way for a harmonious coexistence between AI and humanity — a future where innovation thrives, and society prospers.

Leverage the model for free here:

GitHub: kyegomez/PALM-E, Implementation of “PaLM-E: An Embodied Multimodal Language Model” (github.com)

Join Agora, The Multi-Modal AI Community!

Agora is a new Multi-Modal AI research lab devoted to advancing humanity by implementing the latest research papers and working hand in hand with industry to make reliable, state-of-the-art AI solutions available for everyone to use.

Join our community now!