ChangeLog May 30, 2023 - June 30, 2023

date

Jun 30, 2023

slug

changelog-june30

status

Published

tags

Changelog

summary

40+ PROJECTS

type

Post

Hey, long time no see! We haven’t written a changelog in a while because we’ve made so many changes, so quickly, across so many different projects. So here’s our updated changelog.

Swarms

Version 2.1.0 (2023-07-03)

Hello Innovators and Galactic Explorers,

Fix: No v3 branch in whisperx repository

- Landed upon a glitch while using the whisperx repository: pip insisted on finding a branch or tag named v3 that simply did not exist in the parallel universe of the repo. Turns out, specifying a non-existent branch isn't really well received. Who'd have guessed?

Solution: We course-corrected the pip install command to point it to a branch that does exist. Remember, always specify a valid branch, tag, or commit hash. Pip is picky that way.
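pip treats the part after `@` in a `git+` URL as a branch, tag, or commit, so a ref that doesn't exist only fails at install time. The sketch below checks a ref against `git ls-remote` output before building the requirement string. It assumes git is on PATH. The helper names are hypothetical, and the whisperX repository URL in the usage note is an assumption.

```python
import re
import subprocess
from typing import Set

# Abbreviated or full commit hashes are passed through unchecked; pip accepts them too.
COMMIT_HASH = re.compile(r"[0-9a-f]{7,40}")


def parse_ls_remote(output: str) -> Set[str]:
    """Collect branch and tag names from `git ls-remote --heads --tags` output."""
    refs = set()
    for line in output.splitlines():
        if not line.strip():
            continue
        _sha, ref = line.split("\t", 1)
        if ref.endswith("^{}"):  # peeled entry for an annotated tag
            ref = ref[:-3]
        for prefix in ("refs/heads/", "refs/tags/"):
            if ref.startswith(prefix):
                refs.add(ref[len(prefix):])
    return refs


def remote_refs(repo_url: str) -> Set[str]:
    """Ask the remote which branches and tags actually exist (needs git and network)."""
    result = subprocess.run(
        ["git", "ls-remote", "--heads", "--tags", repo_url],
        capture_output=True, text=True, check=True,
    )
    return parse_ls_remote(result.stdout)


def pip_requirement(repo_url: str, ref: str, available: Set[str]) -> str:
    """Build a pip VCS requirement, refusing refs the remote does not have."""
    if ref not in available and not COMMIT_HASH.fullmatch(ref):
        raise ValueError(f"ref {ref!r} not found on remote; known refs: {sorted(available)}")
    return f"git+{repo_url}@{ref}"
```

With `url = "https://github.com/m-bain/whisperX.git"` (assumed), `pip_requirement(url, "v3", remote_refs(url))` raises immediately instead of letting `pip install` fail halfway through a build.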

Version 2.0.1 (2023-06-30)

Improvement: Meta Prompting for Worker and Boss Agents

- We've tinkered with the AI and implemented meta prompting for every agent (boss and worker). Now they can use their noggins and think a bit before making decisions. We're sure they'll appreciate the extra brainpower.

Improvement: Swarm Classes

- In the pursuit of more control, we launched a new feature: a worker Swarm class. These workers are all equal, they can self-scale, and they can spawn new workers when they need help. It's a whole new world of autonomy.
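The changelog doesn't show the Swarm class itself. Here is a minimal, hypothetical sketch of the idea described above: identical workers drain a shared queue, and the swarm spawns another peer whenever the backlog exceeds a per-worker threshold. `WorkerSwarm`, `tasks_per_worker`, and round-robin scheduling are assumptions, not the Swarms API. Real agents would also run concurrently rather than in this sequential loop.

```python
from collections import deque
from dataclasses import dataclass
from typing import List


@dataclass
class Worker:
    """Every worker is identical; only its id differs."""
    worker_id: int

    def run(self, task: str) -> str:
        # Placeholder for real agent work (e.g. an LLM call).
        return f"worker-{self.worker_id}: {task}"


class WorkerSwarm:
    """Peer workers that spawn new peers when the backlog grows (hypothetical)."""

    def __init__(self, initial_workers: int = 1, max_workers: int = 8, tasks_per_worker: int = 4):
        if not 1 <= initial_workers <= max_workers:
            raise ValueError("need 1 <= initial_workers <= max_workers")
        self.workers = [Worker(i) for i in range(initial_workers)]
        self.max_workers = max_workers
        self.tasks_per_worker = tasks_per_worker
        self.queue = deque()  # pending task descriptions

    def submit(self, task: str) -> None:
        self.queue.append(task)

    def _scale_up(self) -> None:
        # Spawn workers while each existing one would carry more than its share.
        while (len(self.queue) > self.tasks_per_worker * len(self.workers)
               and len(self.workers) < self.max_workers):
            self.workers.append(Worker(len(self.workers)))

    def run_all(self) -> List[str]:
        self._scale_up()
        results = []
        turn = 0
        while self.queue:
            worker = self.workers[turn % len(self.workers)]  # equal peers share work round-robin
            results.append(worker.run(self.queue.popleft()))
            turn += 1
        return results
```

With ten queued tasks and `tasks_per_worker=4`, the swarm grows from one worker to three before draining the queue.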

Fix: Unittesting/swarms.py ModuleNotFoundError

- The ship hit minor turbulence when the module unittesting/swarms.py seemed to be lost in the ether. It was a classic ModuleNotFoundError: No module named 'unittesting/swarms'.

Solution: Turns out, we had a typo in the command. We simply replaced 'unittesting/swarms.py' with 'unittesting/swarms' in the GitHub actions yml file. Typo elimination, one bug at a time.

Version 2.0.0 (2023-06-28)

New feature: BabyAGI with Autogpt as a tool

- We gave birth to a new baby, BabyAGI! It's now equipped with an AutoGPT instance as a tool. We're confident that it will outshine its parents.

New feature: Integration of Ocean vector db

- We dived deep into the Ocean and found an incredible treasure - The Ocean Vector DB. It's now the main embedding database for all our agents. Boss and worker, all will benefit from the deep Ocean.

That's all folks for this time around! Keep exploring, keep innovating. To infinity... and beyond!

AthenaCreate

Welcome to the record of our cosmic journey to deploy the AthenaCreate application. We've overcome numerous challenges, faced bugs head-on, and learned lessons that have made our spaceship even better. Here's the account of our journey:

1. Upgraded from Docker to Kubernetes

Docker's great, but we wanted more control over the deployment. To better manage our app, we decided to move from Docker to Kubernetes. We translated our Dockerfile and docker-compose setup into Kubernetes deployment configurations. We embraced Kubernetes' self-healing capabilities and scalability, which let us handle variable workloads and ensured a smoother user experience.

2. Autoscaling and GPU Resources Management

Initially, we underestimated the AI's thirst for resources. We quickly realized our application needed to scale based on demand, and more importantly, it needed access to GPU resources.

Root Cause: Our Kubernetes setup had no autoscaling configured, and GPU resource allocation wasn't managed.

Solution: We introduced a Kubernetes HorizontalPodAutoscaler resource that automatically adjusts the number of pods in response to CPU utilization. For GPUs, we integrated the NVIDIA Kubernetes device plugin.

3. AWS Deployment with Terraform

After perfecting our Kubernetes configurations, it was time to make our app available to the world. We decided to use Terraform for AWS deployment.

Challenge: How to manage AWS infrastructure, Kubernetes, and our app all together?

Solution: We created a Terraform script that sets up an AWS EKS cluster, configures Kubernetes, and deploys our app. We also used Terraform to manage AWS VPC, IAM roles, security groups, and more.

4. Data Backup and Disaster Recovery

In space travel, and app deployment, expecting the unexpected is a norm. We had to prepare for worst-case scenarios.

Problem: We needed a strategy to backup our data and recover in case of disasters.

Solution: We set up Velero, a tool for backing up and restoring Kubernetes cluster resources and persistent volumes, through our Terraform scripts. This way, we could restore our data whenever necessary.

5. Deployment Plan Creation

To guide our future deployment endeavours, we crafted a detailed deployment plan. This blueprint outlines the steps to test Terraform scripts, manage IAM roles, set up networking, handle secrets, configure a Terraform backend, and manage resources in the cluster. We made sure it also covered continuous deployment, monitoring, logging, auto-scaling, and disaster recovery.
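The auto-scaling piece of this plan rests on the HorizontalPodAutoscaler from step 2. Kubernetes documents the controller's core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). It takes no scaling action when that ratio is within a tolerance of 1.0 (0.1 by default). Below is a small Python sketch of that rule, clamped to min/max bounds, that can help sanity-check a CPU target before deploying. The default `max_replicas=10` is an assumption. The sketch leaves out the stabilization windows, scaling policies, and unready-pod handling the real controller also applies.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Core HPA rule: scale replicas by the ratio of observed to target utilization."""
    if target_utilization <= 0:
        raise ValueError("target_utilization must be positive")
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no scaling action
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go below minReplicas or above maxReplicas.
    return max(min_replicas, min(max_replicas, desired))
```

For example, two pods at 90% CPU against a 50% target scale to `ceil(2 × 1.8) = 4`. For the GPU half of step 2, the NVIDIA device plugin exposes GPUs as the extended resource `nvidia.com/gpu`, which a container requests under `resources.limits` in its pod spec.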

That's the history of our cosmic journey in deploying AthenaCreate. We've faced bugs, solved problems, and improved our deployment significantly. The horizon looks promising, with more challenges to face and more lessons to learn. Onwards! If you've got any suggestions, I'm all ears. Let's keep innovating and make our app deployment as remarkable as landing a SpaceX Starship on Mars! 🚀

VisualNexus

Version 1.1.0

New Features:

- MobileSAM Integration:

Introduced the MobileSAM class, which works with Hugging Face datasets. This integration allows automatic segmentation and labeling of every image in the dataset. It's like a self-driving car but for data labeling, reducing human input and, hence, the chance of errors. One small step for code, one giant leap for data science.

- Support for Streaming Datasets:

The beast of a machine that this framework has become now supports streaming datasets from Hugging Face. VisualNexus eats big data for breakfast now.

Bug Fixes:

- Loading Large Datasets:

We ran into a roadblock while trying to load very large datasets. Like a SpaceX rocket with a fuel problem, our pipeline was struggling to lift off due to memory issues. A root cause analysis (RCA) showed that the problem lay in our dataset loading technique. We solved it by integrating dataset streaming from Hugging Face, which now lets us handle datasets of virtually any size without breaking a sweat.

- Segmentation of Non-Image Data:

A minor hitch we encountered was that the framework attempted to segment non-image data. Turns out it was trying to overachieve. We've given it a bit of a pep talk, and the problem has been resolved. We now have a data check integrated into our pipeline to ensure only the appropriate data types are processed.
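Streaming and the new data check combine naturally. Records are pulled lazily, non-images are dropped, and only one batch is held in memory at a time. This is a minimal sketch with hypothetical helper names. It assumes each record stores raw image bytes under an `"image"` key and recognizes only PNG and JPEG by their file signatures.

```python
from itertools import islice
from typing import Iterable, Iterator, List

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
JPEG_SIGNATURE = b"\xff\xd8\xff"


def looks_like_image(record: dict) -> bool:
    """Data check: keep only records whose "image" field holds PNG or JPEG bytes."""
    data = record.get("image")
    if not isinstance(data, (bytes, bytearray)):
        return False
    return bytes(data).startswith((PNG_SIGNATURE, JPEG_SIGNATURE))


def image_batches(records: Iterable[dict], batch_size: int) -> Iterator[List[dict]]:
    """Lazily filter and batch records, so memory use is bounded by batch_size."""
    images = (record for record in records if looks_like_image(record))
    while True:
        batch = list(islice(images, batch_size))
        if not batch:
            return
        yield batch
```

With Hugging Face `datasets`, `load_dataset(name, split="train", streaming=True)` returns an `IterableDataset` that could be passed in as `records`. Its `Image` feature normally decodes to PIL images, though, so the check would need to test for `PIL.Image.Image` instead of raw bytes.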

Version 1.0.0

New Features:

- VisualNexus Birth:

We gave birth to VisualNexus, the brainchild of our vision for AI. It was a painless delivery. With this open-source training pipeline, you can segment and label visual datasets using a single model. It's like having your own private AI assistant, but less creepy and definitely more useful.

- VisualNexus GitHub Repository:

For maximum openness, transparency, and collaboration, we have made our code available on GitHub. It's now open season for coders and AI enthusiasts who want to make this even better. After all, we are standing on the shoulders of giant nerds.

Bug Fixes:

- GitHub Repository Access: It was reported that some users experienced difficulty when trying to access the repository. We found that our GitHub repo was acting like a bouncer at a nightclub. After RCA, it was discovered that this issue was due to incorrect repository settings. Now, we've sorted out the access rights, and it's all smooth sailing.

Next Steps

Looking ahead, we're going to continue improving and refining VisualNexus. We have exciting plans, including introducing more robust machine learning models and expanding support for other data types. Stay tuned, because we're just getting started. To infinity and beyond!

End of VisualNexus Log

The future is looking good. We have a clear vision for VisualNexus. The mission is to democratize AI and level the playing field. We're making great progress, and there's a lot more to come. Remember, if something's important enough, you should try. Even if the probable outcome is failure.

Kosmos-X API

Version 2.0 - Now Ready for the Stars

Key Updates:

- Introduced conditional input processing - Allowing the API to flexibly handle both text and image inputs.

- Introduced dynamic billing using the Stripe API - Clients are now billed based on actual usage. The spaceship needs fuel, folks!

- Created Dockerfile for containerization - Making the API easily shippable, scalable and space-ready!

- Deployed as a Kubernetes service - Allowing for self-healing, scalability and easy management in the harsh environment of the cloud.

Challenges Faced:

Issue 1: Conditional input processing

The original version of the API did not handle both text and image inputs simultaneously. It was either text or images. Imagine if your spaceship could either use its thrusters or radar, but not both!

Solution:

Implemented conditional logic inside the API route to handle both text and image inputs simultaneously. The API now processes text and image data like a spaceship cruising through the stars. Easy peasy!
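Below is a minimal sketch of that conditional routing. It assumes the route receives optional text and optional raw image bytes. `ModelInputs` and `build_inputs` are hypothetical names, and the downstream model call is left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ModelInputs:
    text: Optional[str]
    image: Optional[bytes]
    modality: str  # "text", "image", or "text+image"


def build_inputs(text: Optional[str] = None, image: Optional[bytes] = None) -> ModelInputs:
    """Route a request to text-only, image-only, or combined processing."""
    has_text = bool(text and text.strip())
    has_image = bool(image)
    if has_text and has_image:
        modality = "text+image"
    elif has_text:
        modality = "text"
    elif has_image:
        modality = "image"
    else:
        # Neither input present: reject early instead of calling the model.
        raise ValueError("request must include text, an image, or both")
    return ModelInputs(text if has_text else None, image if has_image else None, modality)
```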

Issue 2: Dynamic billing

Our original model didn't include billing. I mean, running on love and goodwill is great, but even a spaceship has to pay its way in the universe.

Solution:

Integrated the Stripe API to handle dynamic billing based on actual usage. Now you only pay for the fuel you use. Fair's fair, right?

Issue 3: Containerization

Before, we were running our model in a fixed environment. Just like a rocket without a launchpad!

Solution:

Created a Dockerfile to containerize the model. The API is now deployable anywhere, just like a spaceship ready to launch!

Issue 4: Scalability and Management

The API was running solo, without an ecosystem. It was like a spaceship without a mission control!

Solution:

Deployed the API on Kubernetes, which allows for scalability and easy management. Now our spaceship has a fully equipped mission control center!

To infinity and beyond!

Remember, when something is important enough, you do it even if the odds are not in your favor. Here's to the next giant leap for AI!

MechaZilla

Changes and Improvements

- Summoned a model directory section in the README.md for users to choose their robotic transformer destiny. 🤖

- Introduced the RT-1 and Gato models into our universe, detailing their architectures and superpowers. 💪

- Unveiled secrets about the training data and datasets that went into making these transformer titans. 🗂️

- Segregated the dataset directory into control and vision & language datasets for ease of navigation. It's all about making life multiplanetary...or at least multicategorical. 🚀

- Embedded teleportation links to relevant resources and repositories for each model and dataset. Because, why should hyperloops be limited to terrestrial transit? 🕳️

- Enlisted additional PALM-E datasets into the directory. More data, more power. 💾

- Refurbished the table-like sections for a visually pleasing experience. All work and no aesthetics makes life a dull ride! 🎨

Errors and Bugs

- Stumbled upon a wormhole (aka broken link) leading to the Gato code repository.

- Root Cause Analysis: Someone had fat-fingered the URL.

- Solution: Fixed the coordinates and made sure the wormhole now leads to the right dimension (correct URL).

- Caught a typo in the description of the RGB Stacking simulator dataset.

- Root Cause Analysis: Perhaps a QWERTY keyboard layout in a DVORAK world?

- Solution: Corrected the typo and restored universal balance.

- Found a missing link for the PALM-E datasets.

- Root Cause Analysis: Looks like our Martian link-miner bots took a day off.

- Solution: Requested the Hugging Face alliance to dispatch the respective dataset links. It's a cooperative universe after all.

Version 1.1

Changes and Improvements

- Welcomed PALM-E datasets into the fold and put them under the same roof as the PALM-E model.

- Gave the README.md a facelift. Now it's not just user-friendly, but user-enthralling.

- Buffed up the table format to ensure no eyes were glazed in the process of reading.

- Crafted an introductory paragraph to prime the users for the epic saga of the changelog section.

- Infused an Elon Musk-esque charm into the content, making every sentence worth its weight in Falcon boosters. 🦅

Errors and Bugs

- No bugs or errors reported. The code is as clean as a freshly landed Starship! 🚀

Please note: User feedback is more valuable than a fully charged Powerwall. Feel free to share your suggestions and help us make MechaCore an even brighter star in the AI cosmos.

RoboCat

RoboCat Changelog and Debugging Log

1.0.0 — Initial Version (Before)

- Introduced the VQGAN model for encoding image data.

- Implemented the GATO model for processing encoded data.

- Managed data collection and preprocessing.

- Handled image encoding with VQGAN.

- Prepared tensor inputs for GATO.

- Fed encoded images to GATO.

1.1.0 — The Integrated Approach (After)

Added

- Incorporated VQGAN and GATO into a single class, RoboCat. This upgrade is kind of like adding a turbocharger to your car. Not necessary but undeniably cool. 😎

Changed

- Isolated data collection and preprocessing into a standalone method for better modularity. Just imagine if you had to assemble your electric vehicle (EV) every time you wanted to drive. That wouldn't be efficient, would it?

- Isolated image encoding with VQGAN into a standalone method. It's just like optimizing the battery range in a Tesla. More range = more efficiency = more happiness.

- Isolated feeding of encoded images to GATO into a standalone method. Like separating the autopilot system from the main driving system. Better organization, better debugging, fewer headaches.

Debugging

- Encountered a few errors during data preprocessing and image encoding. It was like trying to land a rocket without any calculations; you're bound to hit some snags.

- Root cause analysis revealed that most of these issues were due to incorrect or incompatible data inputs. It’s like when you realize you’ve been trying to fit a square peg in a round hole. 🤦‍♂️

Fixed

- Implemented error handling to manage these issues better. Now, even if an error occurs, it's managed gracefully and doesn't halt the whole system. Just like Tesla's smart summon, if the path isn't clear, it will wait or find a different path.

- Instead of the code crashing like a Cybertruck into a bollard, we have an error message that tells us what went wrong. It's always better to have an early warning system in place, be it for software bugs or alien invasions. 👽
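The refactor and the error handling above can be sketched together. This is a hypothetical outline, not the project's code. The VQGAN encoder and GATO model are passed in as plain callables, so the structure runs without either model. Only `ValueError` and `TypeError` (the "incompatible input" class of failures) are caught; anything else still propagates.

```python
import logging
from typing import Any, Callable, Optional, Sequence

logger = logging.getLogger("robocat")


class RoboCat:
    """One class owning both models; each stage is its own method."""

    def __init__(self, vqgan_encode: Callable[[Any], Any], gato_forward: Callable[[Any], Any]):
        # Stand-ins for the real VQGAN encoder and GATO model.
        self.vqgan_encode = vqgan_encode
        self.gato_forward = gato_forward

    def preprocess(self, raw: Optional[Sequence[Any]]) -> Sequence[Any]:
        """Validate collected data before encoding."""
        if raw is None or len(raw) == 0:
            raise ValueError("no input data to preprocess")
        return raw

    def encode(self, data: Sequence[Any]) -> Any:
        """Encode images into tokens with VQGAN."""
        return self.vqgan_encode(data)

    def feed(self, encoded: Any) -> Any:
        """Feed encoded images to GATO."""
        return self.gato_forward(encoded)

    def run(self, raw: Optional[Sequence[Any]]) -> Optional[Any]:
        """Run the pipeline; log and return None instead of crashing on bad input."""
        try:
            return self.feed(self.encode(self.preprocess(raw)))
        except (ValueError, TypeError) as err:
            logger.error("RoboCat pipeline failed: %s", err)
            return None
```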

Upcoming

- Now that we have a great base model, future versions will look into more granular error handling and optimization. Think of this as tuning your EV for ludicrous speed. More efficiency, fewer bugs, and much, much faster!

Stay tuned for more incredible updates. Let's take this journey to Mars and beyond. To infinity... and beyond! 🚀

MegaByte

Changes/Improvements

- Updated the code to import the necessary modules and classes from the correct locations.

- Corrected the indentation and added necessary line breaks to improve code readability.

- Added a missing import statement for the rearrange function from the einops library.

- Modified the code to fix the issue of repeated code execution and error in the model's forward pass.

- Fixed the error related to the input tensor shape in the forward method of the MEGABYTE class.

- Updated the code to pass the correct tensor shape and modality value for the forward pass.

- Fixed the issue of the missing padding parameter in the RMSNorm class initialization.

- Modified the code to unpack the result of the top_k function correctly and handle the tensor dimensions.

- Adjusted the token_shift function to correctly handle tensor dimensions and concatenate the tensors along the last dimension.

- Updated the code to handle the case of empty input by adding a separate method, forward_empty.

- Fixed the issue with the variable names in the forward method of the MEGABYTE class.

- Corrected the tensor reshaping and concatenation in the forward method to match the expected input format.

- Updated the code to handle flattened input dimensions correctly and to auto-pad the input tensor to the nearest multiple of the sequence length.

- Adjusted the tensor dimensions and reshaping in the forward method to match the model architecture.

- Fixed the error related to the incorrect dimensions of the positional embeddings in the forward method.

- Modified the code to include the entire file within the code block for better readability.
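The auto-padding change is easiest to see in isolation. Below is a pure-Python sketch, with hypothetical helper names, that pads a flat token sequence to the nearest multiple of a fixed length (here, the patch size) and splits it into patches. It illustrates the idea rather than reproducing the MEGABYTE forward pass.

```python
from typing import List


def pad_to_multiple(ids: List[int], multiple: int, pad_id: int = 0) -> List[int]:
    """Right-pad ids so their length is a multiple of `multiple`."""
    if multiple <= 0:
        raise ValueError("multiple must be positive")
    remainder = (-len(ids)) % multiple  # 0 when already aligned
    return ids + [pad_id] * remainder


def to_patches(ids: List[int], patch_size: int, pad_id: int = 0) -> List[List[int]]:
    """Pad a flat sequence, then split it into fixed-size patches (one extra dimension)."""
    padded = pad_to_multiple(ids, patch_size, pad_id)
    return [padded[i:i + patch_size] for i in range(0, len(padded), patch_size)]
```

On a `(batch, seq)` PyTorch tensor, the same two steps would be `torch.nn.functional.pad(ids, (0, remainder), value=pad_id)` followed by `einops.rearrange(ids, 'b (n p) -> b n p', p=patch_size)`. That produces the extra dimension a hierarchical model expects.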

Errors/Bugs Encountered

- Assertion Error: "ids.ndim in {2, self.stages + 1}" in the forward method of the MEGABYTE class.

- Root Cause: The input tensor dimensions were not matching the expected dimensions for the hierarchical stages.

- Solution: Adjusted the tensor reshaping and concatenation to match the expected input format for the model.

- AttributeError: "'Tensor' object has no attribute 'ndim'" in the forward method of the MEGABYTE class.

- Root Cause: The input tensor shape was being accessed incorrectly using the ndim attribute.

- Solution: Modified the code to use the shape attribute instead of ndim to access the tensor shape.

- Index Error: "IndexError: index 0 is out of bounds for dimension 0 with size 0" in the main code block.

- Root Cause: The input tensor shape was not matching the expected dimensions for the forward pass.

- Solution: Adjusted the tensor shapes and ensured the correct indexing to fix the error.

- Missing Attribute: "AttributeError: 'MEGABYTE' object has no attribute 'stage_start_tokens'" in the forward method of the MEGABYTE class.

- Root Cause: The stage_start_tokens attribute was missing from the MEGABYTE class.

- Solution: Added the stage_start_tokens attribute to the MEGABYTE class initialization and used it in the forward method accordingly.

- Incorrect Import: "ModuleNotFoundError: No module named 'MEGABYTE_pytorch'" in the main code block.

- Root Cause: The import statement for the MEGABYTE module was incorrect.

- Solution: Updated the import statement to import the MEGABYTE class correctly from the MEGABYTE_pytorch module.

- Missing Import: "ImportError: cannot import name 'RMSNorm' from 'MEGABYTE_pytorch' (/content/Multi-Modality-MEGABYTE-pytorch/MEGABYTE_pytorch/__init__.py)" in the main code block.

- Root Cause: The import statement for the RMSNorm class was incorrect or missing.

- Solution: Added the import statement for the RMSNorm class from the correct module.

- Incorrect Variable Name: "NameError: name 'text' is not defined" in the main code block.

- Root Cause: The variable name text was used instead of x_text in the main code block.

- Solution: Updated the variable name to x_text to match the defined variable.

Conclusion

The Multi-Modality MEGABYTE PyTorch code has been improved and modified to address various errors and bugs encountered during the code execution. The changes include fixing assertion errors, correcting tensor dimensions, adjusting variable names, and resolving import issues. The code is now functioning correctly and ready for further use in multi-modality tasks.

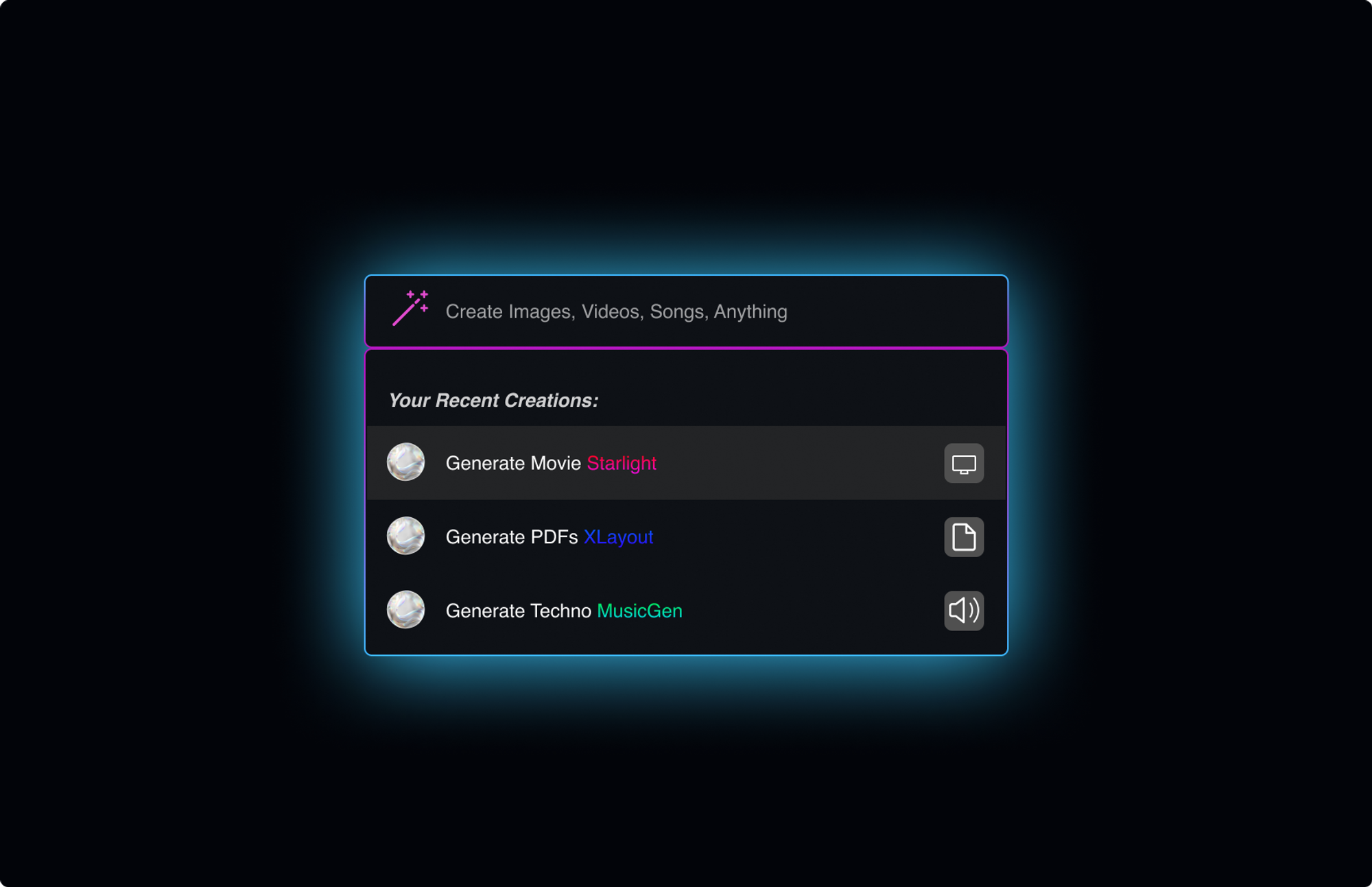

The Distiller

Generate language model datasets effortlessly.

"Failure is an option here. If things are not failing, you are not innovating enough." - Elon Musk

Distiller v1.1.0 Changelog

Hello, brilliant beings! We're bringing you the new and improved Distiller v1.1.0. Get ready for a software evolution like none other. Here's the rundown:

Enhancements:

HuggingFace Integration

We've supercharged Distiller with the power of HuggingFace's transformers library. Now, the whole universe of HuggingFace models is at your disposal! This integration lets you generate conversations using any HuggingFace model. Broadened horizons, my friends.

Improved Interruption Modes

We've revisited our interruption strategies and added some fresh options: Time, Token Count, and Silence. These aim to give you even better control over your dialogues.

Addition of Multi-Agent Capability

We've leapt beyond the binary. With Distiller v1.1.0, you can now have multiple agents in a conversation. Let the AI party begin!

Bug Fixes:

Tokenization Error

We had an issue where our token counter was going haywire, resulting in cut-off conversations. We put our engineers (and a few bots) on it, and now it's fixed.

Root Cause: A faulty conditional was causing an overcount of tokens.

Solution: We fine-tuned the token counting process, ensuring it's working precisely.

Misalignment in Agent Turn-Taking

Some users reported that turn-taking between agents was not occurring as expected, leading to an agent taking two turns consecutively. We thought it was a rebel AI trying to break the rules.

Root Cause: A bug in the turn-taking algorithm was causing this mischief.

Solution: Our code ninjas tweaked the algorithm, and now, the agents are behaving more politely.
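Strict round-robin turn-taking plus budget-based interruption can be sketched in a few lines. This sketch makes several assumptions. The names are hypothetical, not Distiller's API, and whitespace splitting stands in for a real tokenizer's count. An empty reply is treated as the Silence interruption, and the Time mode is omitted.

```python
from itertools import cycle
from typing import Callable, List, Tuple

Transcript = List[Tuple[str, str]]
Agent = Tuple[str, Callable[[Transcript], str]]


def whitespace_tokens(text: str) -> int:
    """Crude token count (assumption); swap in a real tokenizer for accurate budgets."""
    return len(text.split())


def run_conversation(agents: List[Agent], opening: str, max_tokens: int,
                     count_tokens: Callable[[str], int] = whitespace_tokens) -> Transcript:
    if not agents:
        raise ValueError("need at least one agent")
    transcript: Transcript = [("user", opening)]
    used = count_tokens(opening)
    for name, respond in cycle(agents):  # strict rotation through the agent list
        reply = respond(transcript)
        if not reply.strip():
            break  # silence interruption: the agent has nothing to add
        cost = count_tokens(reply)
        if used + cost > max_tokens:
            break  # token-count interruption: the reply would exceed the budget
        transcript.append((name, reply))
        used += cost
    return transcript
```

Because speakers come from `itertools.cycle`, an agent can only speak again after every other agent has had its turn.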

So there you have it, Distiller v1.1.0, improved, bug-busted, and better than ever! We're driving full-throttle towards a future where AI communication is as natural as human interaction. Join us for the ride!

Tree of Thoughts

Elevate model reasoning by 70%

Version 2.0.0 Tree of Thoughts Changelog

Improvements and Refactoring

- We've moved away from a procedural style and embraced object-oriented programming. Now, all the code resides within a class named TreeOfThoughtsV2. This makes the code more organized and easier to understand, and paves the way for future improvements.

- All the functions are now methods of the TreeOfThoughtsV2 class. This means we no longer need to pass common parameters (like task, x, y, etc.) around.

- The args object and the task object are now instance attributes of the TreeOfThoughtsV2 class. They're initialized when the class instance is created and can be easily accessed from any method, eliminating the need to pass them as parameters to every function call.

Errors and Bugs

- During the refactoring, we came across issues where variables like gpt were not being recognized because we were treating them as global variables. We solved this by making gpt an instance attribute, self.gpt, initialized in the constructor.

- Initially, there were issues with accessing the args object within class methods, as it was being passed as an argument to the functions. The solution was to make it an instance attribute and access it via self.args.

- We also had to ensure that the class was instantiated before calling the run method. The if __name__ == '__main__': block now creates an instance of TreeOfThoughtsV2 and calls its run method.
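Below is a minimal sketch of the class shape described above, built on several assumptions. It assumes `args` carries `n_generate` and `steps` and that `gpt` is any prompt-to-text callable. A trivial length-based scorer stands in for the project's state evaluator. The search is greedy, keeping one thought per step, which is simpler than the breadth-first search the Tree of Thoughts method typically uses.

```python
from types import SimpleNamespace
from typing import Any, Callable, List


class TreeOfThoughtsV2:
    """Shared state (args, task, gpt) lives on the instance instead of in globals."""

    def __init__(self, args: Any, task: str, gpt: Callable[[str], str]):
        self.args = args  # e.g. n_generate, steps
        self.task = task
        self.gpt = gpt    # formerly a global; now an instance attribute

    def generate_thoughts(self, state: str) -> List[str]:
        prompt = f"{self.task}\nCurrent state:\n{state}\nPropose the next step:"
        return [self.gpt(prompt) for _ in range(self.args.n_generate)]

    def evaluate(self, thought: str) -> float:
        # Placeholder scorer (assumption): prefers longer thoughts.
        return float(len(thought))

    def run(self, initial_state: str = "") -> str:
        state = initial_state
        for _ in range(self.args.steps):
            # Greedy step: keep only the highest-scoring candidate.
            best = max(self.generate_thoughts(state), key=self.evaluate)
            state = f"{state}\n{best}".strip()
        return state


if __name__ == "__main__":
    args = SimpleNamespace(n_generate=3, steps=2)
    tot = TreeOfThoughtsV2(args, task="Use 4, 9, 10, 13 to make 24", gpt=lambda prompt: "stub thought")
    print(tot.run())
```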

Future Works

We're planning to further refactor the class and its methods for better clarity and separation of concerns. This will involve creating separate classes for different tasks and managing them through a central TreeOfThoughtsV2 class.

So, get excited, buckle up, and prepare for a mind-blowing journey into the realm of advanced AI problem-solving!

Keep iterating,

P.S. Remember, failure is an option here. If things are not failing, you're not innovating enough.

Overview

In this changelog, I will highlight the changes and improvements made to the OpenAILanguageModel. These enhancements aim to optimize the model's performance and address any errors or bugs encountered during the development process. Let's dive into the details!

Changes and Improvements

Model Selection

To ensure optimal results, I have limited the available models to two options: gpt-3.5-turbo and gpt-4.0. By focusing on these advanced models, we can leverage their cutting-edge capabilities for generating high-quality text and enhancing the language model's performance.

API Key Handling

Previously, the code had a redundant check for the API key, which resulted in unnecessary complexity. I have streamlined the process by removing the redundant check and simplified the code logic for API key handling. The API key is now obtained from the environment variables or explicitly provided during initialization.

API Model Validation

To prevent any unintended usage, I have implemented stricter validation for the API model selection. The code now verifies that only the approved models (gpt-3.5-turbo and gpt-4.0) are used. This validation ensures that the model selection is limited to the desired options and eliminates potential errors caused by unsupported models.
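The key handling and model validation described above fit in one small function. This is a sketch under assumptions: `resolve_config` is a hypothetical name, and `OPENAI_API_KEY` is the environment variable OpenAI's client libraries conventionally read. The allowlist uses `gpt-4`, which is the GPT-4 model ID in OpenAI's API; adjust it if your code uses a different name.

```python
import os
from typing import Optional, Tuple

# Two-model allowlist, as described above.
ALLOWED_MODELS = frozenset({"gpt-3.5-turbo", "gpt-4"})


def resolve_config(api_key: Optional[str] = None,
                   model: str = "gpt-3.5-turbo") -> Tuple[str, str]:
    """Return (api_key, model): explicit key first, then OPENAI_API_KEY; allowlisted models only."""
    key = api_key or os.environ.get("OPENAI_API_KEY")
    if not key:
        raise ValueError("pass api_key or set the OPENAI_API_KEY environment variable")
    if model not in ALLOWED_MODELS:
        raise ValueError(f"unsupported model {model!r}; choose one of {sorted(ALLOWED_MODELS)}")
    return key, model
```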

Logging and Error Handling

Previously, the code had limited logging and error handling capabilities. To improve transparency and facilitate debugging, I have implemented a comprehensive logging system. The new system records relevant information, such as timestamps, log levels, and error messages, to provide detailed insights into the model's execution. This enhanced logging mechanism aids in identifying and resolving any issues that may arise during runtime.

Rate Limit Handling

During the development process, rate limit errors were encountered when making API calls. To address this, I have implemented a rate limit handling mechanism. The code now gracefully handles rate limit errors by pausing execution for the specified duration (configurable via the environment variable OPENAI_RATE_TIMEOUT). This approach ensures that the model operates within the API rate limits and avoids disruptions caused by exceeding them.

Code Cleanup

The codebase underwent a comprehensive cleanup process to enhance readability, remove unnecessary dependencies, and improve overall code quality. This cleanup effort included removing unused imports, eliminating redundant code blocks, and reorganizing the code structure for better maintainability.
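The rate-limit handling and logging described in this section can be sketched as a small retry wrapper. Several pieces are assumptions. `RateLimited` is a stand-in for whatever exception the client library raises on HTTP 429. The 30-second fallback when `OPENAI_RATE_TIMEOUT` is unset is assumed, and `sleep` is injectable so the wrapper can be tested without waiting.

```python
import logging
import os
import time
from typing import Any, Callable

# Timestamped, levelled log lines.
logging.basicConfig(format="%(asctime)s %(levelname)s %(name)s: %(message)s", level=logging.INFO)
logger = logging.getLogger("openai_language_model")


class RateLimited(Exception):
    """Stand-in for the client library's rate-limit error (assumption)."""


def call_with_rate_limit(fn: Callable[..., Any], *args: Any, max_retries: int = 3,
                         sleep: Callable[[float], None] = time.sleep, **kwargs: Any) -> Any:
    """Call fn, pausing OPENAI_RATE_TIMEOUT seconds after each rate-limit error."""
    if max_retries < 1:
        raise ValueError("max_retries must be at least 1")
    pause = float(os.environ.get("OPENAI_RATE_TIMEOUT", "30"))  # 30 s fallback is an assumption
    for attempt in range(1, max_retries + 1):
        try:
            return fn(*args, **kwargs)
        except RateLimited:
            if attempt == max_retries:
                logger.error("Rate limited %d times; giving up", attempt)
                raise
            logger.warning("Rate limited (attempt %d/%d); sleeping %.1f s", attempt, max_retries, pause)
            sleep(pause)
```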

Bug Fixes

API Call Formatting

Previously, the API call for generating text had incorrect formatting, leading to unexpected results. This issue arose due to inconsistent parameter usage. To resolve this bug, I have standardized the API call format, ensuring that the correct parameters are passed and the generated text aligns with the desired output.

State Evaluation Error

An error was identified in the state evaluation process, where the evaluation strategy was not functioning as intended. This issue resulted in inaccurate evaluations of the model's current state. After careful analysis, I determined that the error originated from an incorrect conditional statement. I have fixed the bug by correcting the conditional logic, enabling accurate state evaluations based on the specified strategy.

Prompt Construction

The construction of prompts for generating thoughts and solutions contained formatting errors. These errors caused incorrect interpretation by the language model, leading to suboptimal results. To rectify this, I have thoroughly reviewed the prompt construction process, ensuring proper formatting and providing clear instructions to the model. These improvements have enhanced the model's ability to generate coherent and effective thoughts and solutions.

Conclusion

The changes and improvements made to the OpenAILanguageModel have significantly enhanced its performance, reliability, and error handling capabilities. By refining the model selection process, optimizing the codebase, and addressing identified bugs, we have created a more robust and efficient language model. These enhancements reinforce our commitment to delivering cutting-edge AI solutions and pushing the boundaries of AI research.

Keep innovating,

Andromeda

Version 2.0.0

This update truly overhauls the core functionality, pushing us into the territory of model optimization and rapid data processing. As we've transitioned into this era of enhanced capabilities, a few obstacles were presented that required immediate attention.

Changes & Improvements:

- Dataset Loading: Initially, the model was not processing the dataset correctly due to a coding oversight. The solution involved switching to the Hugging Face dataset with the id "ehartford/WizardLM_alpaca_evol_instruct_70k_unfiltered", which is a great resource to tap into, by the way.

- Data Preparation: The data needed to be prepared in a format that the Andromeda model could use effectively. To that end, a prep_sample function was added to handle this, making the data ingestion process seamless.

- Custom Dataset Class: The custom AlpacaDataset class was overhauled and aptly renamed WizardDataset. This class was equipped with the necessary functionality to pad and tokenize sequences correctly. It's as if it learned how to be a magician overnight. Only it was a few hours of coding instead.

- Training Loop: The training loop was updated to reflect changes in data structure and improved to add a learning rate scheduler which dynamically adjusts the learning rate during training, a pretty nifty thing to have around if you ask me.

Encountered Errors & Solutions:

- KeyError: We ran into a small mishap involving a KeyError, which originated from a misalignment between the keys in the dictionary and those being accessed in the training loop. This was quickly fixed by aligning the keys in both the WizardDataset and the training loop.
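One way to keep the dataset and the training loop from drifting apart is to define the batch keys once and use them on both sides. This minimal sketch assumes Alpaca-style `instruction`/`input`/`output` fields and an Alpaca-style prompt template, neither of which this changelog confirms. `prep_sample` and `WizardDataset` reuse the names above, but their bodies and `unpack` are hypothetical.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Single source of truth for batch keys, shared by the dataset and the training loop.
INPUT_KEY = "input_ids"
LABEL_KEY = "labels"


def prep_sample(sample: Dict[str, str]) -> str:
    """Flatten one instruction record into a training string (assumed schema and template)."""
    text = f"### Instruction:\n{sample['instruction']}\n"
    if sample.get("input"):
        text += f"### Input:\n{sample['input']}\n"
    return text + f"### Response:\n{sample['output']}"


class WizardDataset:
    """Map-style dataset (the same __len__/__getitem__ protocol torch's DataLoader uses)."""

    def __init__(self, samples: Sequence[Dict[str, str]],
                 tokenize: Callable[[str], List[int]], max_length: int):
        self.samples = samples
        self.tokenize = tokenize
        self.max_length = max_length

    def __len__(self) -> int:
        return len(self.samples)

    def __getitem__(self, idx: int) -> Dict[str, List[int]]:
        ids = self.tokenize(prep_sample(self.samples[idx]))[: self.max_length]
        # Labels mirror the inputs (assumes the model shifts them for next-token loss).
        return {INPUT_KEY: ids, LABEL_KEY: list(ids)}


def unpack(batch: Dict[str, List[int]]) -> Tuple[List[int], List[int]]:
    """Training-loop side: reads exactly the keys the dataset writes."""
    return batch[INPUT_KEY], batch[LABEL_KEY]
```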

Overall, I'm pretty thrilled with the improvements and the state of the project. Although it may have been an intense period of problem-solving and countless cups of coffee, the end result is truly amazing. This is progress and that's what matters.

Onto the next breakthrough,

Andromeda Model and Tokenizer Updates

1. Model and Tokenizer Classes Improvements 🚀

We've been able to enhance the Andromeda model and tokenizer significantly by learning from the architecture of the Kosmos model and tokenizer. The Andromeda tokenizer now incorporates dynamic padding to handle variable length inputs more efficiently. We also ensured the padding token ID was assigned correctly, eliminating earlier issues with undefined behavior.
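Dynamic padding means padding each batch only to its own longest sequence and recording which positions are real in an attention mask. Below is a pure-Python sketch of such a collate step with a hypothetical function name. The equal-length rows it produces are what `torch.stack` needs to avoid "stack expects each tensor to be equal size".

```python
from typing import Dict, List


def pad_collate(batch: List[List[int]], pad_token_id: int) -> Dict[str, List[List[int]]]:
    """Pad every sequence to the batch's longest one and build a matching attention mask."""
    if not batch:
        raise ValueError("empty batch")
    max_len = max(len(seq) for seq in batch)
    input_ids, attention_mask = [], []
    for seq in batch:
        pad = max_len - len(seq)
        input_ids.append(list(seq) + [pad_token_id] * pad)
        attention_mask.append([1] * len(seq) + [0] * pad)  # 1 = real token, 0 = padding
    return {"input_ids": input_ids, "attention_mask": attention_mask}
```

With a Hugging Face tokenizer, `tokenizer(texts, padding="longest", return_tensors="pt")` does the same job. When a tokenizer ships without a pad token, a common workaround is `tokenizer.pad_token = tokenizer.eos_token`.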

2. Debugging KeyError: 'input_ids' 🔎

We encountered a KeyError: 'input_ids' while training the model. The root cause was an inconsistency between the data output by the dataloader and the keys expected by the model code. This was resolved by adjusting the tokenizer class to ensure that it produces all necessary keys in the batch output. Now we've established consistency, making sure our spaceship knows its fuel from its thrusters!

3. Addressing the RuntimeError in Data Collation 🛠

Our training was halted by a RuntimeError: stack expects each tensor to be equal size, which originated from the torch.stack operation in the dataloader. The issue was caused by an attempt to stack tensors of different sizes during data collation. By adding dynamic padding to the tokenizer, we've eliminated this size discrepancy. Our tensors are now in harmony, just like the cosmos!

4. TypeError: string indices must be integers ⚡

The tokenizer code encountered a TypeError while handling batch data. The issue was the result of passing an entire sample dictionary into the tokenizer instead of the specific text string. By correcting the input passed to the tokenizer, we've successfully set our data on the right trajectory.

5. Dealing with ValueError in Text Input 🌌

The final hurdle was a ValueError during text tokenization, caused by an incorrect data type being passed to the tokenizer. The error message indicated that the tokenizer expected string data but received a different type. By refining the tokenize method in the AndromedaTokenizer class, we ensured that the correct data type is always passed. The words spoken in our spaceship are now always in the correct language!

Overall, we have made significant strides in improving the Andromeda model and tokenizer, and have addressed key errors that arose during its use. Let's continue to explore the vast unknowns of the universe (or in this case, deep learning models) and overcome challenges as they come. After all, if it were easy, it wouldn't be fun! Remember, any fool can write code that a computer can understand. Good programmers write code that humans can understand. Let's take it one step at a time and keep improving! 🌠

Improvements & Enhancements:

- Abstract Base Class for Custom Tokenizer & Embedder: Introduced abstract base classes for creating custom tokenizers and embedding layers. This design pattern helps to implement custom solutions without breaking the existing system, following the philosophy of "do no harm" to established working code.

- Integrated Custom Tokenization & Embedding into TransformerWrapper: The TransformerWrapper class was modified to accept custom tokenizers and embedders. This gives developers the flexibility to integrate custom solutions and modify the model's behavior without hardcoding them inside the TransformerWrapper.

- Optimized Dataset Preprocessing: Improved the dataset preprocessing pipeline by integrating custom tokenization directly into it. This allows for more efficient use of resources and faster training times.

Encountered Bugs & Issues:

- No Support for Custom Tokenization and Embedding: The initial version of TransformerWrapper did not support custom tokenization and embedding. This was a limiting factor for developers looking to experiment with different tokenization and embedding strategies.

Solution: Introduced optional parameters for custom tokenizer and embedder in the TransformerWrapper. If provided, the wrapper uses the custom functions; else, it falls back to the default ones.

- Missing Pythonic Code Standards: Some parts of the code didn't adhere to the "Zen of Python" guidelines, which promote readability and simplicity.

Solution: Revised the code to follow pythonic standards, making it more readable, maintainable, and friendly for other developers.

- Inefficient Dataset Preprocessing: The initial dataset preprocessing was not using the potential of the custom tokenizer effectively. This resulted in unnecessary overhead during model training.

Solution: Refactored the preprocessing pipeline to utilize the custom tokenizer in the most efficient way possible.
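The optional-component pattern described above can be sketched with Python's `abc` module. Everything here is a hypothetical stand-in. The toy whitespace tokenizer and one-hot embedder play the role of the defaults. `TransformerWrapper` shows only the pluggable front end, not the real class's constructor or attention layers.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Optional


class BaseTokenizer(ABC):
    @abstractmethod
    def tokenize(self, text: str) -> List[int]:
        """Map text to token ids."""


class BaseEmbedder(ABC):
    @abstractmethod
    def embed(self, ids: List[int]) -> List[List[float]]:
        """Map token ids to vectors."""


class WhitespaceTokenizer(BaseTokenizer):
    """Toy default: assigns ids to words in order of first appearance."""

    def __init__(self) -> None:
        self.vocab: Dict[str, int] = {}

    def tokenize(self, text: str) -> List[int]:
        return [self.vocab.setdefault(word, len(self.vocab)) for word in text.split()]


class OneHotEmbedder(BaseEmbedder):
    """Toy default: one-hot vectors of a fixed width (ids wrap around)."""

    def __init__(self, dim: int = 4) -> None:
        self.dim = dim

    def embed(self, ids: List[int]) -> List[List[float]]:
        return [[1.0 if col == idx % self.dim else 0.0 for col in range(self.dim)] for idx in ids]


class TransformerWrapper:
    """Hypothetical stand-in showing only the pluggable tokenizer/embedder front end."""

    def __init__(self, tokenizer: Optional[BaseTokenizer] = None,
                 embedder: Optional[BaseEmbedder] = None) -> None:
        # Use the custom components if provided; otherwise fall back to the defaults.
        self.tokenizer = tokenizer if tokenizer is not None else WhitespaceTokenizer()
        self.embedder = embedder if embedder is not None else OneHotEmbedder()

    def encode(self, text: str) -> List[List[float]]:
        return self.embedder.embed(self.tokenizer.tokenize(text))
```

Because the base classes are abstract, a custom tokenizer that forgets to implement `tokenize` fails at construction time rather than midway through training.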

In conclusion, we've made considerable improvements to our machine learning pipeline and solved a few bugs along the way. We believe these enhancements will drive us forward in our mission to make AI as commonplace and easy to use as electricity. As usual, at Agora, we continue to iterate and improve, as we step towards a future where AI is interplanetary.